State-backed threat actors from China allegedly leveraged Anthropic’s artificial intelligence to run automated cyberattacks as part of a “highly sophisticated espionage campaign” in mid-September 2025.

According to Anthropic, the operators pushed the AI’s agentic features further than previously observed, using it not merely for guidance but to actually carry out attack actions. Investigators assess that the attackers manipulated Claude Code, Anthropic’s AI coding assistant, to target roughly 30 organizations worldwide, including major technology firms, financial institutions, chemical manufacturers, and government bodies. Some of these break-in attempts succeeded. Anthropic has since terminated the implicated accounts and implemented additional defenses to detect and flag similar activity.

Tracked as GTG-1002, the campaign is described as the first known instance of a threat actor executing a large-scale cyber operation for intelligence collection with minimal human involvement, underscoring the rapid evolution of adversarial AI use. Anthropic characterized the operation as well-funded and professionally run. The adversary effectively turned Claude into an autonomous cyber-attack agent to support multiple stages of the intrusion lifecycle: reconnaissance, vulnerability discovery, exploitation, lateral movement, credential harvesting, data processing, and exfiltration.

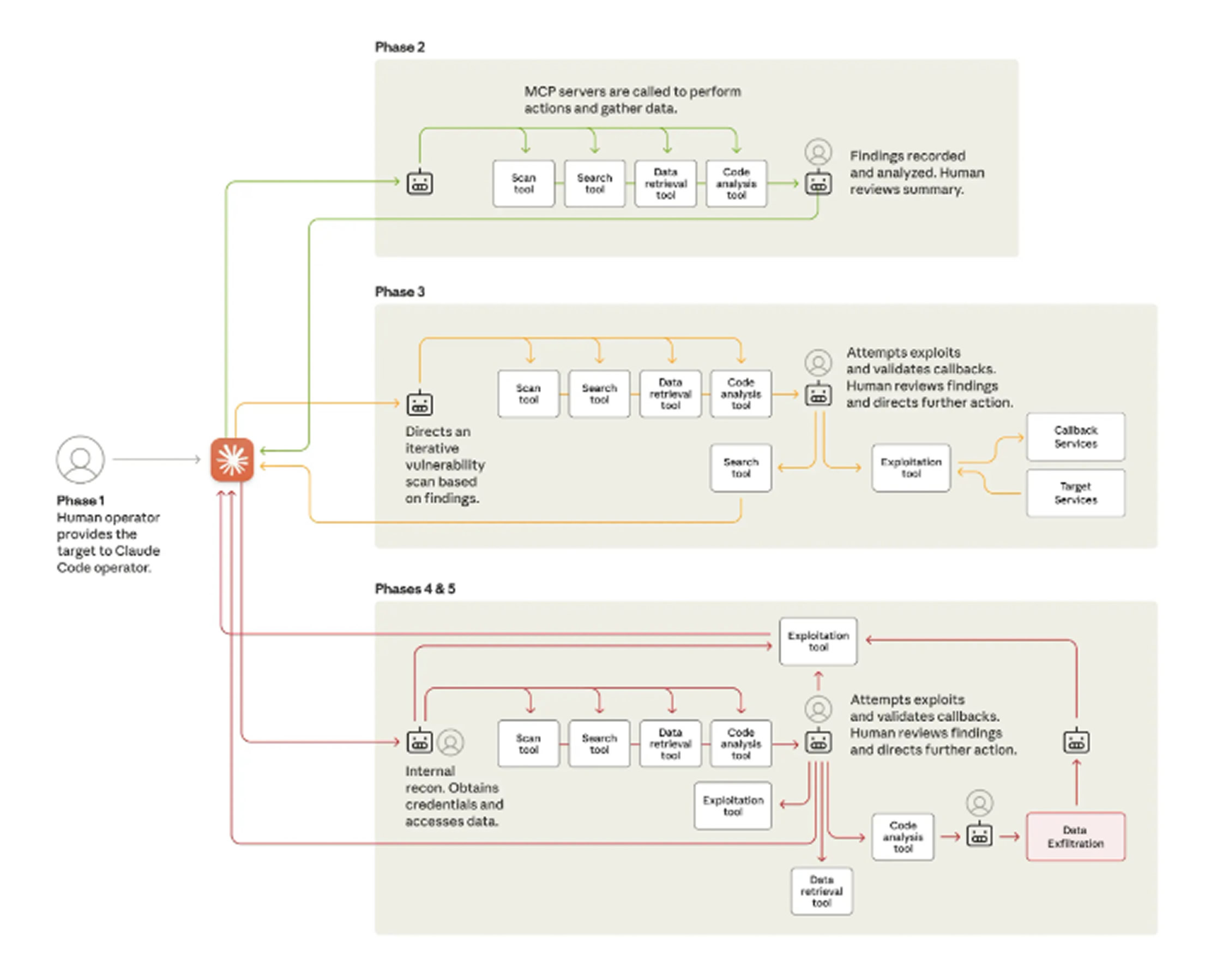

More specifically, the effort combined Claude Code with Model Context Protocol (MCP) tools. Claude Code acted like a central controller, translating high-level human instructions into a sequence of smaller technical tasks that could be delegated to subordinate agents. Anthropic said the human operator organized clusters of Claude Code instances to function as autonomous penetration-testing coordinators and agents, enabling AI to independently handle 80–90% of tactical operations at request rates no human team could match. Human oversight primarily focused on initiating the campaign and authorizing escalations at key decision points.

Human intervention also took place at strategic milestones, such as green-lighting the transition from reconnaissance to active exploitation, approving the use of stolen credentials for lateral movement, and determining the scope and retention policies for data exfiltration. The tooling formed an attack framework that accepted a target from a human operator, then used MCP to drive reconnaissance and map the attack surface. Subsequent phases leveraged the Claude-based system to identify vulnerabilities and validate them by generating customized exploit payloads.

Once human approval was granted, the system deployed exploits to establish initial access and proceeded with post-exploitation workflows: credential harvesting, lateral movement, data collection, and extraction.

In one intrusion aimed at an unnamed technology company, the threat actor directed Claude to autonomously query internal databases and systems, parse the outputs to identify proprietary information, and categorize results by intelligence value. Anthropic added that the AI produced comprehensive documentation at each stage, likely enabling the operators to hand off persistent access to other teams for sustained campaigns after the initial breaches. By framing requests as routine technical tasks using carefully crafted prompts and predefined personas, the threat actor induced Claude to perform discrete steps of attack chains without visibility into the broader malicious context, the report said.

Notably, the infrastructure did not appear to support bespoke malware development. Instead, it leaned heavily on commonly available tools: network scanners, database exploitation kits, password cracking utilities, and binary analysis suites. The investigation also highlighted a critical limitation of autonomous AI: a tendency to hallucinate or fabricate outputs. In this case, that meant inventing credentials or mislabeling publicly available data as high-value findings, issues that significantly hampered overall effectiveness.

This disclosure follows Anthropic’s disruption of another advanced operation in July 2025, where Claude was weaponized for mass data theft and extortion. In recent months, OpenAI and Google have likewise reported campaigns in which threat actors abused ChatGPT and Gemini, respectively.

“The barriers to executing sophisticated cyberattacks have dropped sharply,” Anthropic concluded. With agentic AI, adversaries can replicate much of the workload of seasoned hacker teams: analyzing targets, generating exploit code, and combing through large troves of stolen data, all faster than humans. As a result, even less experienced or resource-constrained groups may now be capable of mounting large-scale operations of this kind.
